Development and validation of interpretable machine learning models for inpatient fall events and electronic medical record integration

Abstract

Objective

Falls are one of the most frequently occurring adverse events among hospitalized patients. The Morse Fall Scale, which has been widely used for fall risk assessment, has two limitations: low specificity and difficulty in practical implementation. The aim of this study was to develop and validate an interpretable machine learning model for fall prediction that can be integrated into an electronic medical record (EMR) system.

Methods

This was a retrospective study involving a tertiary teaching hospital in Seoul, Korea. Based on the literature, 83 known predictors were grouped into seven categories. Interpretable fall event prediction models were developed using multiple machine learning models, including gradient boosting, with Shapley values used for interpretation.

Results

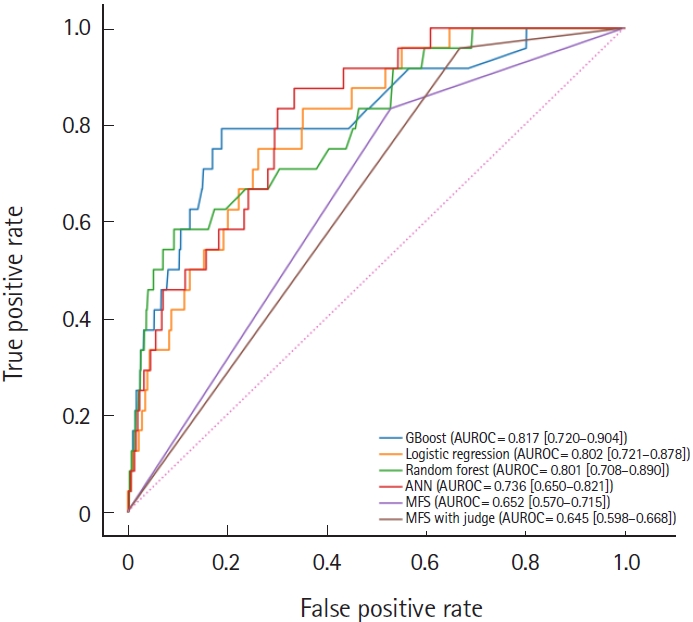

Overall, 191,778 cases with 272 fall events (0.14%) were included in the analysis. In the 2020 validation cohort, the area under the receiver operating characteristic curve (AUROC) of the gradient boosting model was 0.817 (95% confidence interval [CI], 0.720–0.904), outperforming random forest (AUROC, 0.801; 95% CI, 0.708–0.890), logistic regression (AUROC, 0.802; 95% CI, 0.721–0.878), artificial neural network (AUROC, 0.736; 95% CI, 0.650–0.821), and the conventional Morse Fall Scale (AUROC, 0.652; 95% CI, 0.570–0.715). The model’s interpretability was enhanced at both the population and patient levels. The algorithm was later integrated into the current EMR system.

Conclusion

We developed an interpretable machine learning prediction model for inpatient fall events in a format suited to EMR integration.

INTRODUCTION

Falls are one of the most frequently occurring adverse events in hospitalized patients [1], extending hospital stays, increasing medical costs, and increasing disability and mortality [2,3]. In the United States, falls occur at a rate of 3.3 to 11.5 per 1,000 hospital days [4]. Among patient safety accidents in Korea, falls were reported most frequently over the past 5 years, accounting for 45% or more of such incidents, with more than two-thirds resulting in mild or more serious injuries [5].

Nurses must assess patient fall risk and, if necessary, provide appropriate caution and education. According to the Joint Commission on Healthcare Organization Accreditation and the Korea Institute for Healthcare Accreditation, falls are critical incidents, and preventing falls must be highly prioritized as a hospital policy [6,7]. The Morse Fall Scale (MFS) is the most widely used fall risk assessment scale and is used along with other tools [8].

The MFS focuses primarily on intrinsic factors that are related to individual factors such as history of falls or polypharmacy [9], and it poses major limitations in two aspects. First, the prediction accuracy of MFS varies significantly among healthcare settings and patient groups [10,11], complicating its application due to the need for real-time intervention to respond to changing patient conditions. Second, because the MFS does not focus on individual risk factors [12], nurses must apply a broad fall prevention plan without necessarily focusing on individual patient risk factors.

Advances in data science have contributed to the development of accurate fall risk prediction models; other studies created ensemble models or extreme gradient boosting models and identified significant predictors such as low self-care ability, sleep disorders, and medication use [13,14]. Although their accuracy is acceptable, previous machine learning (ML) models are limited in their explainability for clinicians and nurses, a limitation also called the “black box problem.” Another challenge is that previous models have not been well-prepared for electronic medical record (EMR) integration for practical application [15,16].

To address these limitations, the authors developed a fall prediction model using interpretable ML and integrated it into the EMR system to support nursing interventions for each risk factor.

METHODS

Ethical statements

The study was approved by the Institutional Review Board of Samsung Medical Center (No. SMC 2022-03-052-001). Because of the retrospective nature of the study, the need for participant consent was waived.

Study setting

This retrospective study was conducted in Samsung Medical Center, a tertiary academic hospital in Seoul, Korea. The hospital had approximately 2,000 inpatient beds. Patient data from six general medical surgical wards with frequent fall reports were collected. Data were obtained from the EMRs. The TRIPOD (Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis) statement was followed for development and reporting of multivariable prediction models [17].

Study population

All patients who were admitted to the six general wards from January 2018 to March 2020 were included in the study. Patients aged <18 years, those who had a length of stay <24 hours, and those with multiple fall injuries during the same admission were excluded. If patients were admitted more than once, each admission was evaluated independently. These admissions were split into two nonoverlapping cohorts for temporal validation: a development cohort from January 2018 to December 2019 and a testing cohort from January 2020 to March 2020 for evaluation of the model.
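The temporal split described above can be sketched as follows. This is only an illustration: it assumes that each admission is assigned to a cohort by its admission date and that the study period ends on March 31, 2020 (the text gives only the month). The function name `assign_cohort` is hypothetical.

```python
from datetime import date

# Cohort boundaries taken from the text; the exact end day is an assumption.
DEV_START = date(2018, 1, 1)
TEST_START = date(2020, 1, 1)
STUDY_END = date(2020, 3, 31)


def assign_cohort(admission_date):
    """Return 'development', 'testing', or None if outside the study period."""
    if DEV_START <= admission_date < TEST_START:
        return "development"
    if TEST_START <= admission_date <= STUDY_END:
        return "testing"
    return None
```

Because the boundary is a single date, the two cohorts cannot overlap, which is the property a temporal validation relies on.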

Candidate predictors

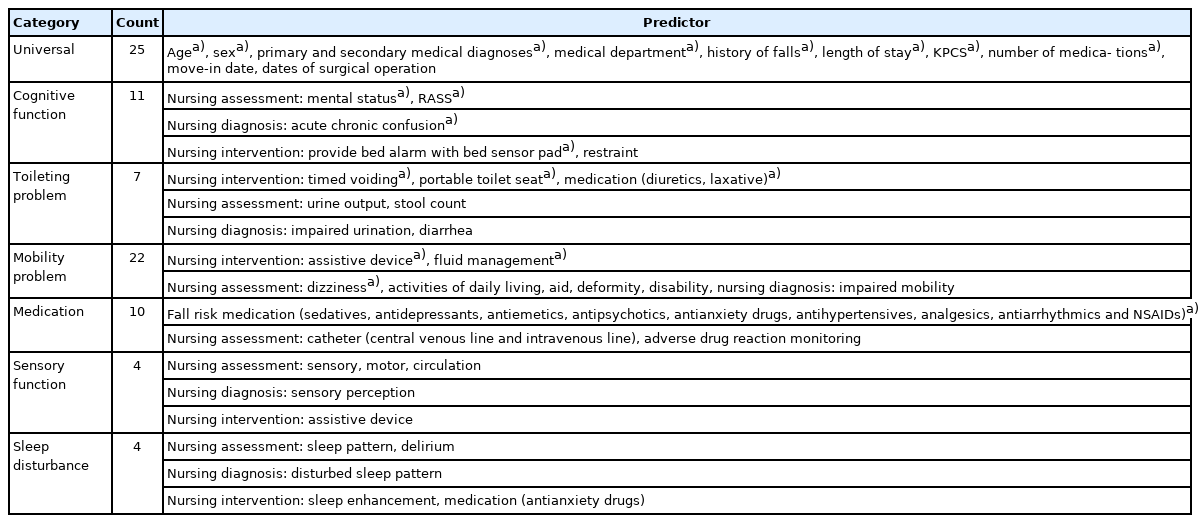

Eighty-three candidate predictors were originally suggested based on a conceptual model of inpatient fall risk concepts from multiple national fall prevention guidelines in the United States and Korea [18]. Demographic and admission data, physician orders, and nurse records were selected as potential predictors. These variables were collected from patient data in the EMR via flowsheets, medical diagnoses, nursing care plans, and free-text notes. In previous studies, nurse intervention for fall prevention was reported to be an important variable in the occurrence of falls [18,19], so the nursing care plan was used as a predictor source in this study. As shown in Fig. 1, each candidate predictor was defined over a 24-hour window from admission, and the window was updated every hour so that a fall prediction could be generated hourly.

Fig. 1. Dataset generation for each variable from one admission. The time window was defined as the 24 hours from the admission time and was regenerated every hour with updated values.

Each circle represents a candidate predictor, and the number of circles is how many times the predictor was measured within 24 hours. The first prediction result can be calculated 24 hours after admission, defined as prediction index time.
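The hourly regeneration of the 24-hour window can be sketched as below. The text does not say whether the window slides or grows after the first 24 hours; this sketch assumes a trailing 24-hour window that slides forward one hour at a time. The function names `prediction_windows` and `count_in_window` are hypothetical.

```python
from datetime import datetime, timedelta

WINDOW = timedelta(hours=24)  # look-back length stated in the text
STEP = timedelta(hours=1)     # regeneration interval stated in the text


def prediction_windows(admission, discharge):
    """Yield (window_start, index_time) pairs, one per hourly prediction.

    The first index time is 24 hours after admission; a trailing
    24-hour window is assumed for every later prediction.
    """
    index_time = admission + WINDOW
    while index_time <= discharge:
        yield index_time - WINDOW, index_time
        index_time += STEP


def count_in_window(event_times, window_start, index_time):
    """Count how many times a predictor was recorded inside one window."""
    return sum(window_start <= t < index_time for t in event_times)
```

Each yielded pair corresponds to one row of the dataset for that admission, with every predictor recomputed from the records that fall inside the window.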

For practical application by clinical providers, these 83 candidate predictors were mapped into seven categories (universal, cognitive function, defecation problems, mobility problems, medication, sensory function, and sleep disturbance) according to the “Evidence based clinical nursing practice guideline of Korea Hospital Nurses Association” [20]. The list of predictors with known clinical and statistical significance in the model is presented in Table 1. Nursing assessments and interventions were frequently recorded for each shift, and the records of initial nursing evaluation at the time of admission were used. In addition, the Korean Patient Classification System (KPCS) score, which is recorded for inpatients every day, was also used. The predictor with the highest contribution was nursing intervention.

Outcomes of prediction models

Two distinct sources were used to collect primary outcomes for predicting falls. One was an incident reporting system. The other was regular expression of 11 fall-related terms, such as “fallen” and “slipped,” from a free-text nursing record. Fall events were manually crosschecked by two nurses.
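The free-text screening step can be sketched with a regular expression. Only two of the 11 terms are named in the text, so the term list below contains just those two and is incomplete by construction; the function name `flag_candidate_notes` and the note format are hypothetical. A match only nominates a note for review, which is consistent with the manual crosscheck described above.

```python
import re

# Only the two terms named in the text; the other nine are not listed there.
FALL_TERMS = ["fallen", "slipped"]

# Whole-word, case-insensitive match on any listed term.
FALL_PATTERN = re.compile(
    r"\b(?:" + "|".join(map(re.escape, FALL_TERMS)) + r")\b",
    re.IGNORECASE,
)


def flag_candidate_notes(notes):
    """Return (note_id, matched_term) for notes that mention a fall term.

    `notes` is an iterable of (note_id, text) pairs. A hit is a candidate
    only; negated or historical mentions still need human review.
    """
    hits = []
    for note_id, text in notes:
        match = FALL_PATTERN.search(text)
        if match:
            hits.append((note_id, match.group(0).lower()))
    return hits
```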

Statistical analysis

For statistical analysis, Python ver. 3.6.0 (Python Software Foundation, Wilmington, DE, USA) and SQL ver. 3.6.6 were used. Continuous variables were described as mean and standard deviation. Categorical variables were described as frequency and percentage. The t-test and chi-square test were used to calculate the P-value, and P<0.05 was considered statistically significant.

The development cohort was used to develop the prediction model, and the testing cohort was used to optimize the hyperparameters. Then, the performance metrics of the final model were calculated based on the testing cohort.

During the analysis, missing values for KPCS score and fluid management were imputed with the most recent nonmissing value; 0 was imputed for other missing count values.
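The two imputation rules can be sketched in plain Python, with `None` standing in for a missing value. The function names are hypothetical, and the handling of a missing value that has no earlier observation (left as missing here) is an assumption, since the text does not cover that case.

```python
def carry_forward(values):
    """Replace each None with the most recent non-missing value.

    Used here for series such as the KPCS score. Leading missing values
    have nothing to carry forward and are left as None (an assumption).
    """
    filled, last = [], None
    for v in values:
        if v is not None:
            last = v
        filled.append(last)
    return filled


def zero_fill(values):
    """Replace each None with 0, as described for missing count values."""
    return [0 if v is None else v for v in values]
```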

ML models

Building on a previous pilot study, in which a Bayesian network model derived from data from January 2017 to June 2018 achieved an area under the receiver operating characteristic curve (AUROC) of 0.93, an eXtreme Gradient Boosting (XGBoost) model was developed. XGBoost is a fast and scalable ML technique for tree-based ensemble models.

Hyperparameter tuning for XGBoost was conducted with a grid search over maximum depth, number of estimators, and learning rate, and the combination with the highest AUROC in 10-fold cross-validation was selected.
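The selection rule of a grid search can be sketched without any modeling library. In the sketch below, `cv_scores` is a hypothetical callable that trains and evaluates a model for one parameter combination and returns one AUROC per fold; it is a placeholder for the actual XGBoost training, which is not shown. The grid values in the usage note are illustrative and are not taken from the study.

```python
from itertools import product
from statistics import mean


def grid_search(param_grid, cv_scores):
    """Return (best_params, best_mean_score) over every grid combination.

    param_grid: dict mapping a parameter name to a list of candidate values.
    cv_scores:  callable taking a params dict and returning per-fold AUROCs.
    Ties keep the first combination encountered.
    """
    names = sorted(param_grid)
    best_params, best_score = None, float("-inf")
    for combo in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, combo))
        score = mean(cv_scores(params))  # average AUROC across folds
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score
```

A call might look like `grid_search({"max_depth": [3, 5], "n_estimators": [100, 200], "learning_rate": [0.05, 0.1]}, cv_scores)`, where `cv_scores` returns ten values for 10-fold cross-validation.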

The AUROC and area under the precision-recall curve (AUPRC) in the validation dataset were calculated. To obtain 95% confidence intervals (CIs), 500 bootstrap repetitions were conducted. Sensitivity and specificity were calculated at the cutoff selected with the Youden index, that is, the point on the ROC curve that maximizes sensitivity + specificity − 1.
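The three calculations in this paragraph can be sketched in plain Python. The sketch makes several assumptions that the text does not state: the CI is a percentile bootstrap, resamples containing only one class are redrawn, and a case is predicted positive when its score is at or above the cutoff. The AUROC function uses the pairwise definition, which is simple but slow on large cohorts. All function names are hypothetical.

```python
import random


def auroc(labels, scores):
    """Probability that a random positive outranks a random negative."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    # Ties between a positive and a negative count as half a win.
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def youden_cutoff(labels, scores):
    """Return (cutoff, sensitivity, specificity) maximizing sens + spec - 1."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    best = None
    for c in sorted(set(scores)):
        tp = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= c)
        tn = sum(1 for y, s in zip(labels, scores) if y == 0 and s < c)
        sens, spec = tp / n_pos, tn / n_neg
        if best is None or sens + spec > best[1] + best[2]:
            best = (c, sens, spec)
    return best


def bootstrap_ci(labels, scores, n_boot=500, alpha=0.05, seed=0):
    """Percentile bootstrap CI for the AUROC (assumed method)."""
    rng = random.Random(seed)
    n = len(labels)
    stats = []
    while len(stats) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        ys = [labels[i] for i in idx]
        if 0 < sum(ys) < n:  # AUROC needs both classes present
            stats.append(auroc(ys, [scores[i] for i in idx]))
    stats.sort()
    lower = stats[int(n_boot * alpha / 2)]
    upper = stats[int(n_boot * (1 - alpha / 2)) - 1]
    return lower, upper
```

With only 272 events among 191,778 cases, bootstrap resamples of the testing cohort contain few positives, which is one reason the reported intervals are wide.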

The prediction models were compared with the conventional point-based MFS, which consists of six evaluation items (history of falling, secondary diagnosis, ambulatory aid, intravenous therapy/heparin lock, gait, and mental status) [21], with and without nurse judgment. To supplement the MFS, the hospital identified high-risk individuals based on clinical judgments by the nurse.
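For readers unfamiliar with the comparator, a point-based MFS calculation can be sketched as follows. The item weights are those of the standard published Morse Fall Scale and are not given in this article; the high-risk cutoff is left as a parameter because the hospital's threshold is not stated. The function and argument names are hypothetical.

```python
# Item weights from the standard published Morse Fall Scale (not from this article).
AMBULATORY_AID = {"none": 0, "crutches_cane_walker": 15, "furniture": 30}
GAIT = {"normal": 0, "weak": 10, "impaired": 20}


def morse_fall_score(history_of_falling, secondary_diagnosis, ambulatory_aid,
                     iv_or_heparin_lock, gait, overestimates_ability):
    """Sum the six MFS items; the total ranges from 0 to 125."""
    return (
        25 * bool(history_of_falling)
        + 15 * bool(secondary_diagnosis)
        + AMBULATORY_AID[ambulatory_aid]
        + 20 * bool(iv_or_heparin_lock)
        + GAIT[gait]
        + 15 * bool(overestimates_ability)  # mental status item
    )


def flag_high_risk(score, cutoff, nurse_judgment=False):
    """MFS with judgment: high risk if the score reaches the cutoff
    or the nurse judges the patient to be at high risk."""
    return score >= cutoff or nurse_judgment
```

Allowing nurse judgment to raise a patient to high risk can only add positives, which matches the pattern reported later of higher sensitivity and lower specificity for MFS with judgment.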

Furthermore, other traditional ML methods were used for comparison. L2-regularized logistic regression, random forest, and a basic artificial neural network (three layers) were fitted with default settings. The software packages used for model development and validation were Python ver. 3.8.5, TensorFlow ver. 2.3.1, and scikit-learn ver. 0.23.2.

Model explainability for EMR integration

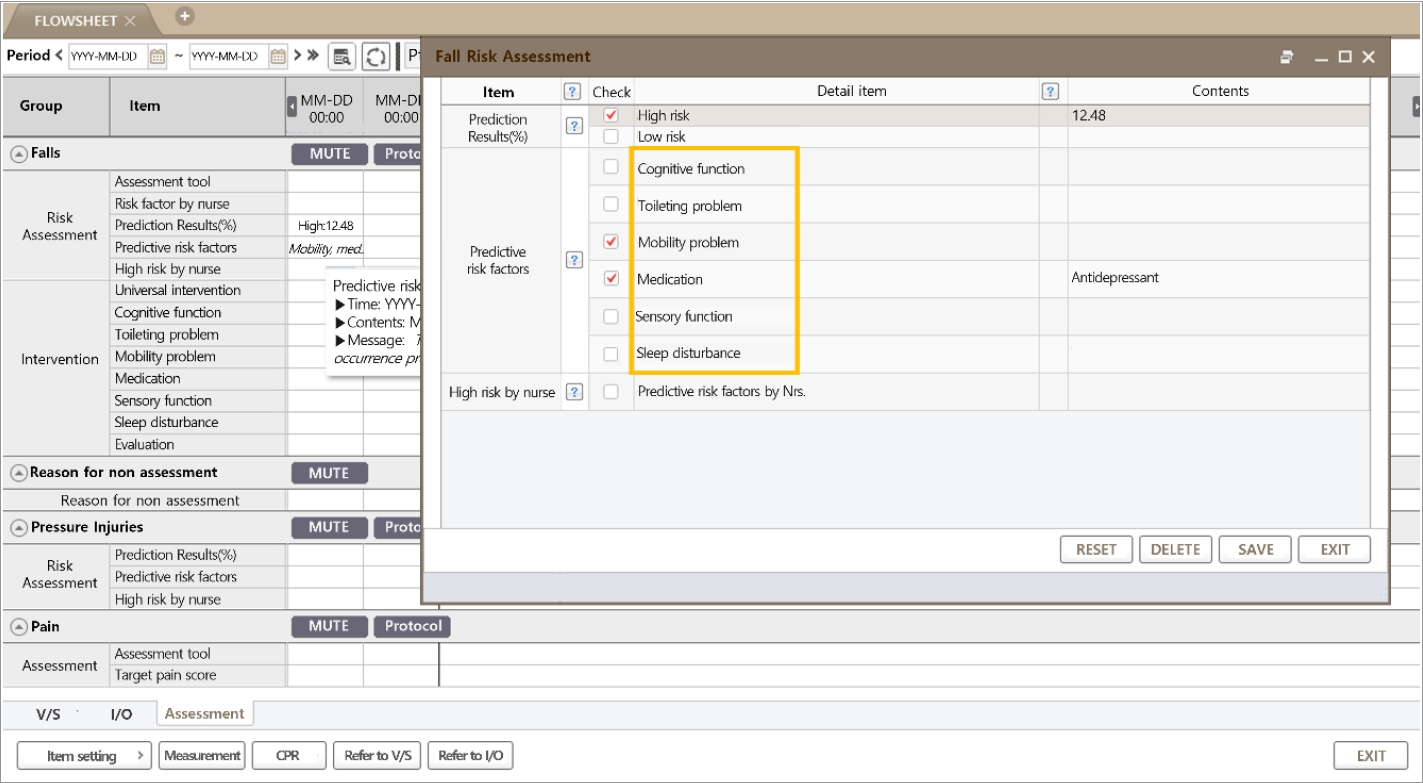

To apply the ML model in the clinical environment, the same model structure was developed for EMR integration. Seven categorized contributing factors to falls were identified at the patient level using the Shapley value, which is widely used in the game theory literature to calculate the contribution of each player in a game [22]. In prediction modeling, the Shapley value can be used to compute the contribution of each feature to the model’s prediction for an individual patient, and it can be visualized with a SHAP force plot, as shown in Fig. 2. The ML model, which suggests patient risk factors according to the Shapley value, was integrated into the EMR, and a screen was developed for use in actual clinical settings.

Fig. 2. Patient-level example of a SHAP force plot for an individualized fall prediction result. Each value indicates the contribution of a feature to the fall prediction; a positive value represents a positive contribution.
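To make the game-theoretic definition concrete, the sketch below computes exact Shapley values by enumerating every coalition of features. This brute-force approach is only practical for a handful of features and is not the authors' implementation; tree-based models are normally explained with specialized algorithms. The `value` callable is a hypothetical stand-in for "the model output when only these features are known".

```python
from itertools import combinations
from math import factorial


def shapley_values(features, value):
    """Exact Shapley value of each feature.

    features: list of feature (or category) names, the "players".
    value:    callable taking a frozenset of names and returning the
              model output when only those features are available.
    """
    n = len(features)
    phi = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for k in range(n):  # size of the coalition that f joins
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = frozenset(subset)
                # Marginal contribution of f to this coalition.
                total += weight * (value(s | {f}) - value(s))
        phi[f] = total
    return phi
```

The values always sum to the difference between the output with all features and the output with none, which is why a force plot can stack the contributions from a base value up to the patient's predicted risk.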

Two components, the predictive probability of falls and the predictive risk factor, were presented on the EMR screen. According to the set threshold, the patient was classified as high or low risk, and the top three categorized feature contributions were selected for the preceding intervention. If the patient was classified as low risk but the nurse did not agree, a rating of high risk was allowed. If the nurse agreed on the predictive result and the risk factors, the recommended intervention was performed and analyzed. When a low-risk patient became a high-risk patient, a popup on the screen informed the nurse of the status change.
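The screen logic described above can be sketched as a small decision function. The threshold value, the function names, and the dictionary layout are hypothetical; the text states only that a threshold was set, that the top three categorized contributions were shown, that a nurse could raise a low-risk rating to high risk, and that a popup announced a change from low to high risk.

```python
def emr_display(probability, category_contributions, threshold,
                nurse_override=False, top_k=3):
    """Build the content shown on the EMR screen for one prediction.

    category_contributions maps a risk category to its aggregated
    Shapley contribution; the top_k largest are shown as risk factors.
    """
    model_high = probability >= threshold
    # A nurse who disagrees with a low-risk rating may raise it to high.
    high = model_high or nurse_override
    ranked = sorted(category_contributions.items(),
                    key=lambda item: item[1], reverse=True)
    return {
        "probability": probability,
        "risk": "high" if high else "low",
        "top_factors": [name for name, _ in ranked[:top_k]],
    }


def needs_popup(previous_risk, current_risk):
    """Notify the nurse only when a low-risk patient becomes high risk."""
    return previous_risk == "low" and current_risk == "high"
```

Because predictions are regenerated hourly, `needs_popup` would be evaluated on each new result against the previous one, so the nurse is alerted once at the transition rather than on every high-risk prediction.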

RESULTS

Basic characteristics

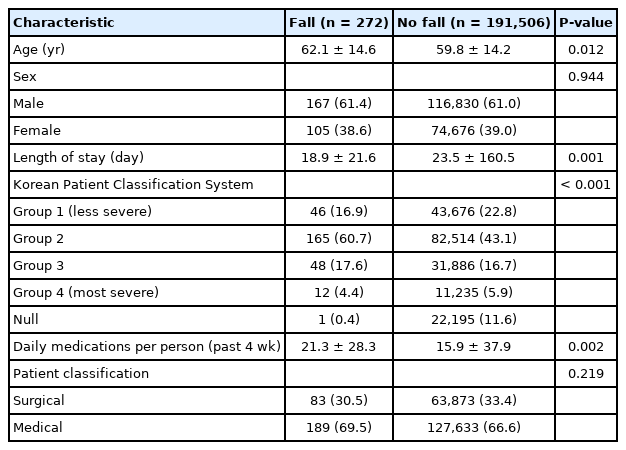

Initially, 257,140 cases between January 2018 and March 2020 were included. A total of 65,362 cases were excluded due to age younger than 18 years, admission to an undefined general ward, events occurring on admission day, or multiple falls during the same admission. A total of 191,778 cases (272 cases [0.14%] in the group with falls) were used for the final data analysis. The flow diagram of the study process is shown in Fig. 3.

Basic characteristics of patients are listed in Table 2. During the study period, 272 fall cases (0.14%; mean age±standard deviation, 62.1±14.6 years; male patients, 167 [61.4%]) were reported. The fall group included more male and elderly patients, and patients in this group were more likely to take medication and have a secondary diagnosis.

ML models

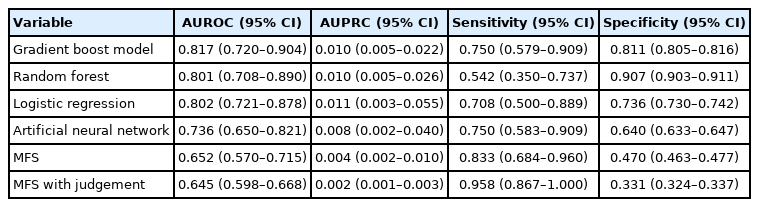

An ML-based fall prediction algorithm was developed. Table 3 summarizes the AUROC, AUPRC, and other metrics using various methods with 95% CI. The best AUROC and AUPRC were 0.817 (95% CI, 0.720–0.904) and 0.010 (95% CI, 0.005–0.022), respectively, for the gradient boost model. The AUROC plot is shown in Fig. 4.

Fig. 4. Comparison of area under the receiver operating characteristic (AUROC) curves for gradient boost model (GBoost), logistic regression, random forest, artificial neural network (ANN), Morse Fall Scale (MFS), and MFS with judgement.

According to the cutoffs determined by the Youden index, the sensitivities (95% CI) of the gradient boost model, random forest, logistic regression, artificial neural network, MFS, and MFS with judgement were 0.750 (0.579–0.909), 0.542 (0.350–0.737), 0.708 (0.500–0.889), 0.750 (0.583–0.909), 0.833 (0.684–0.960), and 0.958 (0.867–1.000), respectively; and the specificities (95% CI) were 0.811 (0.805–0.816), 0.907 (0.903–0.911), 0.736 (0.730–0.742), 0.640 (0.633–0.647), 0.470 (0.463–0.477), and 0.331 (0.324–0.337), respectively.

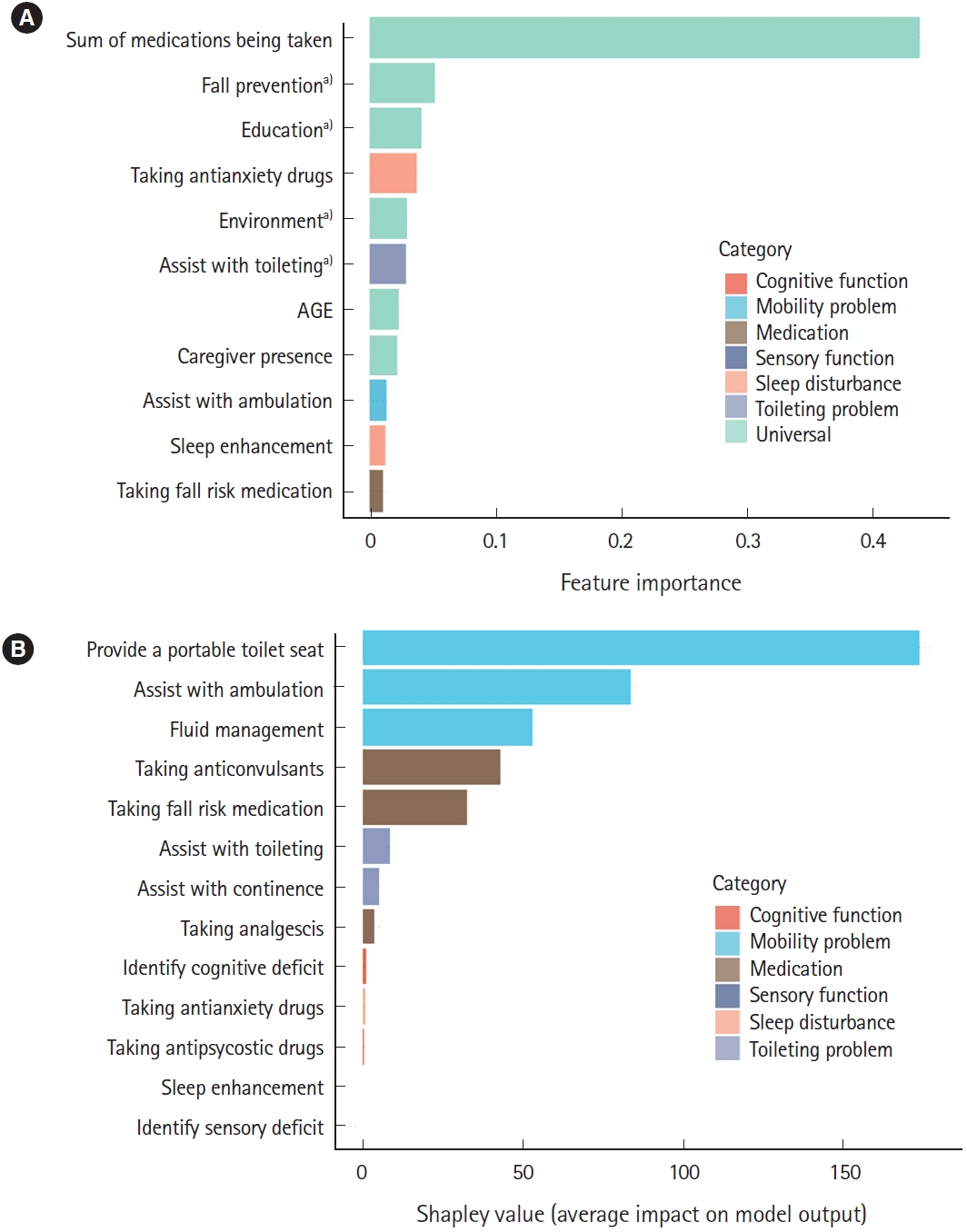

The contribution of the seven categorized factors to fall events was identified at the population and patient levels, as shown in Fig. 5. Number of medications taken, number of nursing interventions for fall prevention, and education were the most influential factors in predicting falls at the population level.

Fig. 5. Population-level and patient-level interpretations for fall events. (A) Population-level interpretation with feature importance by gradient boosting. (B) Patient-level interpretation with Shapley value. a) Nursing intervention.

Regarding the patient-level prediction, as shown in Fig. 5B, patient risk was demonstrated using individual factors and their Shapley values. The patient-level predictors are displayed in the EMR (Fig. 6). The reason for positive fall prediction was displayed as a binary output (checkbox) of cognitive function, toileting problems, mobility problems, medication, sensory function, and sleep disturbance.

DISCUSSION

In this study, an interpretable ML model for fall event prediction was developed using 83 predictors from EMR data, with the gradient boost model demonstrating the highest performance. Predictors were categorized as fall risk factors and incorporated into the EMR. This can be the cornerstone for development of an artificial intelligence (AI)-based clinical decision support system (CDSS) for application to the clinical field.

Patients in this study who experienced falls tended to be older, were hospitalized longer, and used more medications. These results are consistent with the characteristics of patients at risk for falls [14,18]. All these characteristics were used as significant predictors.

The AUROC of the model in this study was 0.817, which was higher than that reported in previous studies [13,14]. Medication and nursing intervention were important predictors, consistent with a previous study [18]. Lower limb weakness and dysuria were the strongest predictors in previous studies [14,19]; consistent with those findings, nursing interventions for toileting assistance and ambulation were also strong predictors in this study. Another study showed that admission data had high feature importance, but that study used more input variables to reflect specific patient conditions [14]. The present study developed a model that included formally reported falls as well as falls that were recorded only in clinical notes. Data that reflect patient status at the time of a fall were used, following a previous study [19]. With these data, model sensitivity and specificity could be improved, both for model accuracy and for accuracy in clinical application settings.

Interpretable ML is critical for nursing practice application [23,24]. Accuracy and actionable intervention are important in the case of in-hospital falls as they might result in lawsuits. The findings of this study will enable identification of risk factors that can guide individualized interventions in fall prevention.

Although many ML algorithms have been developed to predict falls, pressure injuries, and delirium [13,23,25], no previous study has integrated such an algorithm into an actual EMR and applied it there. Prospective studies are needed to determine the applicability and usefulness of an AI-based CDSS.

AI for patient safety is a very impactful and effective area of study and practice. AI can be extended as a valuable tool to improve patient safety in multiple clinical settings including healthcare-associated infections, adverse drug events, venous thromboembolism, surgical complications, pressure ulcers, falls, decompensation, and diagnostic errors [24].

This study had some limitations. First, the study was performed in a single center in a retrospective manner. To verify model performance, further large clinical datasets and multicenter validation are required. Prospective studies are also needed to verify the algorithm performance with the CDSS, including evaluation of its effectiveness and usability in work processes and its effect on safety outcomes.

Second, in terms of predictors, the dataset used in this study was based on routinely collected EMR variables. Thus, other factors such as environmental and behavioral conditions, which are important but not often recorded in the EMR, could not be utilized.

Finally, fall outcomes were collected only through EMR reports. Near-miss cases such as stumbling and sliding, which had not been reported or recognized by providers, have potential importance. Further studies should involve sensors and patient reports about such cases.

In conclusion, the present study developed an interpretable ML prediction model for fall events that was integrated into the EMR. This study is one of the first attempts to integrate AI-CDSS into practice on a large scale, and further studies are needed regarding effectiveness and safety.

Notes

CONFLICT OF INTEREST

No potential conflict of interest relevant to this article was reported.

FUNDING

None.

AUTHOR CONTRIBUTIONS

Conceptualization: SH; Data curation: SJ, YJS, KTM; Formal analysis: SJ, YJS, KTM; Investigation: SS, JYY, JHL, KMY, SHP, ISC, MRS, JHH; Methodology: SJ, YJS, KTM; Visualization: SS, JYY, SJ, YJS, KTM; Writing–original draft: SS, JYY, SH; Writing–review & editing: SS, JYY, SH, JHL, KMY, SHP, ISC, MRS, JHH.

All authors read and approved the final manuscript.

Acknowledgements

The authors thank all the participants in the expert interviews and the survey for their assistance during this study.

References

Capsule Summary

What is already known

Conventional scores such as the Morse Fall Scale are used for fall risk assessment; however, they have low accuracy and pose difficulty in practical implementation. Previous machine learning studies have limitations in explainability for clinicians and nurses, known as the “black box problem.” Another challenge is that previous models have not been well-prepared for electronic medical records integration for practical application.

What is new in the current study

We developed an interpretable machine learning model for prediction of falls and for integration into the electronic medical records system.