Abstract

Objective

Feedback is critical to the growth of learners. However, feedback quality can be variable in practice. Most feedback tools are generic, with few targeting emergency medicine. We created a feedback tool designed for emergency medicine residents, and this study aimed to evaluate the effectiveness of this tool.

Methods

This was a single-center, prospective cohort study comparing feedback quality before and after introducing a novel feedback tool. Residents and faculty completed a survey after each shift assessing feedback quality, feedback time, and the number of feedback episodes. Feedback quality was assessed using a composite score from seven questions, which were each scored 1 to 5 points (minimum total score, 7 points; maximum, 35 points). Preintervention and postintervention data were analyzed using a mixed-effects model that took into account the correlation of random effects between study participants.

Results

Residents completed 182 surveys and faculty members completed 158 surveys. The use of the tool was associated with improved consistency in the summative score of effective feedback attributes as assessed by residents (P=0.040) but not by faculty (P=0.259). However, most of the individual scores for attributes of good feedback did not reach statistical significance. With the tool, residents perceived that faculty spent more time providing feedback (P=0.040) and that the delivery of feedback was more ongoing throughout the shift (P=0.020). Faculty felt that the tool allowed for more ongoing feedback (P=0.002), with no perceived increase in the time spent delivering feedback (P=0.833).

INTRODUCTION

Feedback is important in all fields and is a critical aspect of medical training. In fact, the Accreditation Council for Graduate Medical Education (ACGME) declares feedback to be an essential and required component of resident training [1]. However, studies have demonstrated that current feedback quality can vary, leaving some learners and faculty dissatisfied with the adequacy of the feedback they receive [2–7]. This can be particularly challenging in the emergency department (ED) setting due to time constraints, frequent interruptions, high patient acuity, and learners at multiple stages of training [8,9].

To be effective, feedback should be goal-oriented, constructive, based on observed activities, and timely [10]. It should also focus on specific elements of performance, address how the task was done, and provide guidance to help learners grow beyond their current competence [11]. It is important as well to consider the relationship between the feedback giver and receiver. Borrowing from the psychological concept of a therapeutic alliance, an “educational alliance” is a conceptual framework that incorporates a mutual understanding of educational goals with an agreement on how to work toward those goals [6]. Learners can engage in reflective conversations to relate their self-assessment with educator observations. An educational alliance is strengthened when these discussions are held regularly and often by individuals who exhibit trust and mutual respect. Learners engaged in these alliances are more likely to use the feedback they receive effectively [8,11–16]. However, in the ED setting, feedback is more commonly delivered at the end of the shift in a summative format, frequently using a Milestones-based checklist [17]. This limits the ability to integrate the feedback or sustain the educational alliance since the learner’s next ED shift is often with a different faculty member [8].

Feedback is not always focused on or formally taught as part of graduate medical education, so clinical faculty may not have significant training in the matter. Furthermore, many faculty may not have the time to prioritize keeping up to date with evolving literature in medical education given their other clinical and administrative commitments [18–22]. This can lead to significant variability in how feedback is delivered and result both in learner dissatisfaction with the quality of feedback provided and missed opportunities for growth and development [23–25].

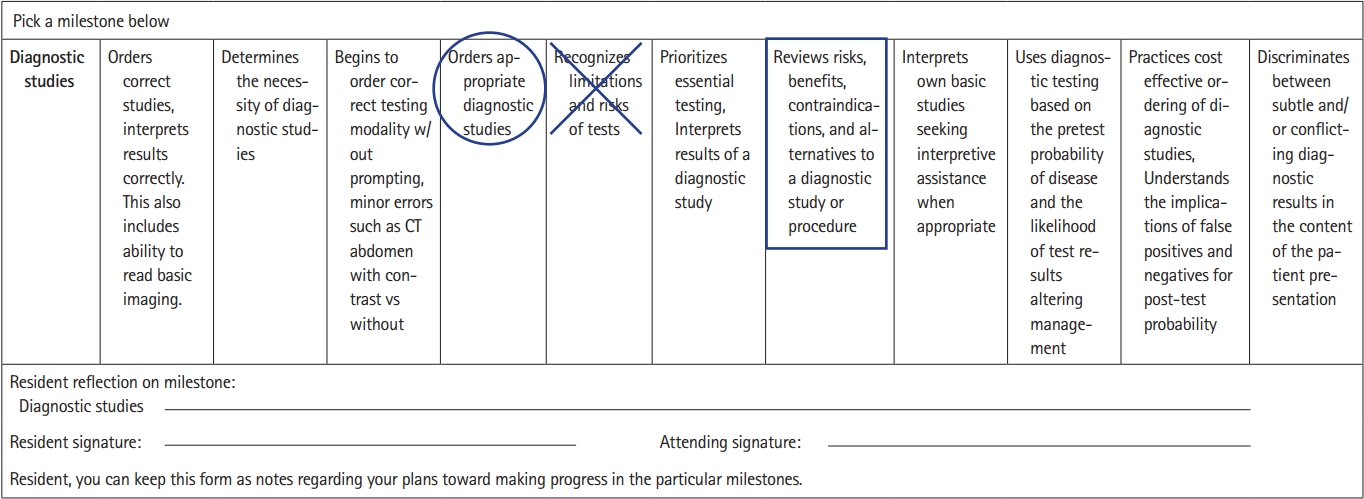

To address this need, we developed a novel feedback tool (Fig. 1) to guide feedback delivery and allow opportunities for integration into the shift. Using a structured tool, residents could identify their specific learning objectives from a full list modeled after the Emergency Medicine (EM) Milestones [26]. Informed by Kolb’s theory of experiential learning, the residents then receive real-time feedback on specific instances after a patient encounter, alter their practice, and see if any changes they made are effective [8,27,28]. Having the learner choose the specific skills in an organized system, with clearly defined and achievable goals to work on during their shift, may prevent defensive reactions and better facilitate learning [5,23]. This could also allow the learner and faculty member to visually track improvements to enable a more comprehensive summative evaluation at the end of the shift.

Our primary goal was to evaluate the impact of a novel tool on the overall consistency in providing attributes of effective feedback in a cohort of EM residents and faculty. A subgroup analysis was planned to evaluate the consistency with regard to specific attributes of effective feedback. Secondary outcomes included differences in perceived feedback timing (i.e., how long feedback takes) and frequency.

METHODS

Ethics statement

The study was approved by the Institutional Review Board of Rush University Medical Center (No. 19031105-IRB01). Informed consent was obtained from all interested participants, and all methodologies and procedures were conducted in line with the Declaration of Helsinki guidelines.

Study setting

This was a single-center, prospective cohort study comparing a composite feedback score before and after a novel feedback tool was introduced. The study was conducted at Rush University Medical Center, a 3-year EM residency program at an urban academic center in Chicago, Illinois, USA, and enrolled 36 EM residents and 38 EM faculty members. All EM resident and faculty physicians were eligible for inclusion in the study (with the exception of the authors), though survey completion was optional. We excluded medical students and non-EM residents. All faculty are trained in EM.

Study design

The preintervention phase occurred from August 24, 2020 to October 8, 2020. During this period, faculty gave residents feedback based on the existing end-of-shift evaluation model used in our department. This consisted of an electronic end-of-shift card, which was informed by the EM Milestones. Feedback was not standardized across faculty, and they had not received any specialized training. During the preintervention time period, residents and faculty completed a survey evaluating their feedback experience after each shift (Supplementary Materials 1, 2). Survey reminders were posted throughout the ED, and individualized emails were sent to resident and faculty physicians before each shift.

We reviewed the literature to identify components of effective feedback and existing feedback-assessment tools. We identified a paucity of existing feedback-assessment tools appropriate for use in this study; therefore, a new tool was created. Based on a thorough review of existing literature, we determined that high-quality feedback should be tangible, goal-referenced, actionable, personalized, timely, ongoing, and consistent [8,10]. We drafted a survey to assess these specific components, with the cumulative summary score of all seven aforementioned elements serving as our primary outcome.

The survey was then piloted and iteratively refined by the authors. Content validity was determined by discussion among attending ED physicians, including an assistant program director, associate dean of the medical college, and core faculty members, which included two individuals with extensive experience publishing and presenting on feedback. Response process validity was determined by piloting the survey on two attending physicians, including one core faculty and one noncore faculty member. The survey included seven questions evaluating the feedback quality (Supplementary Material 1), which were assessed using a Likert scale of 1 (strongly disagree) to 5 points (strongly agree). The consistency in providing attributes of effective feedback (feedback quality) was assessed as a summative score, with a total minimum of 7 points and total maximum of 35 points. The survey also asked about the time spent on feedback (<1, 1–3, 3–5, 5–7, or >7 minutes) and the number of feedback instances per shift (0, 1, 2, 3, 4, or ≥5). Study data were collected and managed using Research Electronic Data Capture (REDCap) electronic data capture tools. REDCap is a secure, web-based software platform designed to support data capture for research studies that provides an intuitive interface for validated data capture, audit trails for tracking data manipulation and export procedures, automated export procedures for seamless data downloads to common statistical packages, and procedures for data integration and interoperability with external sources [29].
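The scoring rule described above can be sketched in a few lines of Python. This is a minimal illustration, not the study's actual scoring code: the attribute identifiers and the function name `composite_feedback_score` are hypothetical, and the one-question-per-attribute mapping is an assumption based on the seven components listed in the text.

```python
from typing import Sequence

# Hypothetical identifiers for the seven feedback attributes named in the text.
ATTRIBUTES = (
    "tangible",
    "goal_referenced",
    "actionable",
    "personalized",
    "timely",
    "ongoing",
    "consistent",
)

LIKERT_MIN, LIKERT_MAX = 1, 5  # 1 = strongly disagree, 5 = strongly agree


def composite_feedback_score(responses: Sequence[int]) -> int:
    """Sum seven Likert items (1 to 5 each) into a composite score of 7 to 35."""
    if len(responses) != len(ATTRIBUTES):
        raise ValueError(f"expected {len(ATTRIBUTES)} responses, got {len(responses)}")
    for name, value in zip(ATTRIBUTES, responses):
        if not LIKERT_MIN <= value <= LIKERT_MAX:
            raise ValueError(f"response for '{name}' must be 1 to 5, got {value}")
    return sum(responses)
```

Under these assumptions, a survey answered "strongly disagree" on every item scores 7 and one answered "strongly agree" on every item scores 35, which matches the stated range.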

From August 24, 2020 to October 8, 2020, we trained our residents and faculty on the new feedback tool. Training was 30 minutes in length and covered only the use of the feedback tool. We did not conduct specific training regarding feedback best practices or other faculty development during the entire study time period. Faculty were educated on the use of the feedback tool during a faculty meeting with most faculty present, while residents were educated during their conference day. Any absent resident or faculty member was sent both a video and verbal explanation of the feedback tool. After allowing time for training and uptake, we collected postintervention data from October 15, 2020 to March 19, 2021, using the same process described above for the preintervention period.

Feedback tool

The feedback tool was developed based on EM Milestones ver. 1.0 (Supplementary Material 3) [26]. All milestones were included, and each milestone was split into 10 strata based on the five levels and criteria described in the EM Milestones document. We chose 10 strata to provide a wide range of options for faculty and residents to rate their skill level. Each milestone had its own separate form and was paper-based to facilitate ease of completion and collection. Because data suggest that feedback may be better received when the message is presented conceptually in a visual manner [30], we used a visual scale to track progress directly (Fig. 1).

Prior to each shift, residents selected two milestones on which to focus for the shift. Blank feedback tool forms were stored in a folder near the resident and faculty workstations. Before seeing patients, residents would circle their self-perceived level for both milestones and have a conversation with the faculty about what they needed to do to get to the next level. Midway through the shift, the resident and faculty would revisit the document to see if progress had been made on each milestone. An “X” was placed on the visual scale to indicate where they thought they were at that point in time, prompting another conversation on opportunities for improvement. At the end of the shift, the resident marked the visual scale with a square to denote where they thought they had ended up. This response was independent of the end-of-shift evaluations completed by attendings, separating this feedback process from the formal evaluation process.

Statistical analysis

A dependent means sample size calculation indicated 140 assessments were needed based on an alpha value of 0.05, power of 80%, and mean total score difference of 1 between the preintervention and postintervention arms. The normality of data was assessed by visual inspection of histogram plots. We report descriptive statistics for the participant responses using median with interquartile range (IQR) values. Preintervention and postintervention data were analyzed using a linear mixed-effects model that took into account the correlation of random effects between study participants and were reported as mean estimates with standard deviations. An a priori, two-sided P-value of <0.05 was considered statistically significant. Comparative data were reported as differences and 95% confidence interval values. A post hoc Bonferroni correction was completed for the subanalyses and set at P<0.005 given the use of one model per the 10 strata evaluated (i.e., original alpha value of 0.05 divided by 10). Analyses were performed using IBM SPSS ver. 22.0 (IBM Corp).
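The Bonferroni threshold stated above is simple arithmetic, and a short Python sketch makes it explicit. The function names are hypothetical and this is not the study's SPSS procedure; it only illustrates dividing the original alpha by the number of comparisons and testing a P-value against the result.

```python
def bonferroni_threshold(alpha: float, n_comparisons: int) -> float:
    """Return the per-comparison significance threshold (alpha / n_comparisons)."""
    if n_comparisons < 1:
        raise ValueError("n_comparisons must be at least 1")
    return alpha / n_comparisons


def significant_after_correction(
    p_value: float, alpha: float = 0.05, n_comparisons: int = 10
) -> bool:
    """Check a P-value against the Bonferroni-corrected threshold.

    The defaults (alpha 0.05, 10 comparisons) are the values stated in the text.
    """
    return p_value < bonferroni_threshold(alpha, n_comparisons)
```

With the stated values, the threshold is 0.05 / 10 = 0.005, so an example P-value of 0.002 would fall below it while one of 0.023 would not.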

RESULTS

Thirty-one residents and 35 faculty participated in the study. In the preintervention period, residents completed 101 total surveys, with a median of four surveys (IQR, 2–6) per person, while faculty completed 94 surveys, with a median of three surveys (IQR, 1–5) per person. In the postintervention period, residents completed 81 total surveys, with a median of two surveys (IQR, 1–4) per person, while faculty completed 64 surveys, with a median of three surveys (IQR, 2–4) per person. Characteristics of the participant groups are noted in Table 1.

The resident data suggested that there was a significant improvement in the composite feedback score after the intervention (linear mixed-model mean estimate, preintervention=26.6/35.0 vs. postintervention=28.2/35.0; P=0.041) (Table 2). Compared to before the implementation of the feedback tool, residents perceived that the faculty spent more time providing feedback (preintervention=3.1/5.0 vs. postintervention=3.4/5.0, P=0.036) and that feedback was more ongoing throughout the shift (preintervention=3.5/5.0 vs. postintervention=3.9/5.0, P=0.023).

In the faculty group, the difference in the overall composite feedback score was not statistically significant (preintervention=26.2/35.0 vs. postintervention=26.9/35.0, P=0.259) (Table 3). Faculty felt that the tool led to more ongoing feedback over the course of the shift (preintervention=3.3/5.0 vs. postintervention=3.8/5.0, P=0.002) without a perceived increase in time spent delivering feedback (P=0.833).

DISCUSSION

As medical education continues to advance and new generations of medical learners transform the ways in which they acquire knowledge, it is critical that the ways in which feedback is given to these learners also evolve [31,32]. Using our novel feedback tool, we found significantly increased consistency in the composite score of attributes of effective feedback (feedback quality) without a significant change in time perceived by faculty devoted to delivering feedback.

Prior literature has focused primarily on faculty development sessions to improve feedback delivery, with fewer studies focusing on supporting tools. One study used a training session on feedback delivery paired with a reminder card and booklet for documentation of noted observations and found a modest improvement in written evaluations and improvement in residents’ perception that feedback would impact their clinical practice [33]. Another study used an extensive training session coupled with a skills checklist to be completed in observed encounters and found that these interventions improved how specific the content of feedback was and that direct observation was viewed by residents as a valuable aspect of their training [34]. These studies, however, required dedicated faculty coaching and time commitments for the observations, which may be more challenging to secure in the ED setting [33,34]. Other studies have focused on providing tools that can increase the ease with which resident evaluations can be completed, whether using app-based systems or QR codes; these studies have primarily focused on increasing the number of evaluations completed rather than on the feedback itself [35–38]. While increasing the quantity of feedback may be important, unintended consequences, such as degrading the process into one of “form filling” and “checking boxes,” may occur [39]. Most importantly, many of the studies on feedback interventions and tools were conducted outside the ED environment and were limited by their retrospective or qualitative design, with few prospective case-control studies, further highlighting the need for an ED-specific tool.

We believe there are several unique benefits to our tool. One of the main individual attributes of effective feedback that did reach statistical significance in both the faculty and resident groups was “my feedback was ongoing.” We believe that having an interactive, physical tool available throughout the shift may be a key to navigating the challenge of the busy ED with frequent interruptions. A visible feedback tool reminds the learner and facilitator of the need to have continued conversations related to resident performance. This tool also allows learners to choose their learning goals as well as to reflect on where they stand and how they are progressing, thereby moving the feedback session from a unilateral delivery of feedback to a bilateral discussion [6]. It also emphasizes self-reflection and accountability to the process by using clear anchors and a visual tool. Finally, the tool standardizes the approach to giving feedback, is simple to use, and aligns with the existing Milestones framework while simultaneously necessitating only minimal formal training for residents and faculty.

Interestingly, most of the individual attributes of effective feedback did not reach statistical significance independently. As a subgroup analysis, this study was not powered for the analysis of the specific components; therefore, it is possible that it may have been underpowered to detect a difference in the individual feedback components. Alternatively, while having set goals chosen at the beginning of the shift in general can improve the ability to provide concrete feedback, it becomes challenging when the chosen goals are not addressed during the shift. In order to address this, residents were asked to pick a pair of milestones to discuss during the shift so there was a greater chance of having something relevant to provide feedback on. We did not, however, keep track of which milestones were more likely to be selected, if the milestones were applicable to the shift experience, or if residents were given feedback on the full breadth of milestones. This may have contributed to the lack of statistical significance in certain individual scores of effective feedback. For instance, the individual attribute “my feedback was tangible” relies on having instances during the shift that are applicable to the specific milestone chosen. In the future, it may be beneficial to assign several milestones to each shift to ensure residents have a greater chance of receiving feedback on clinically applicable milestones, which may lead to improvement in the scores of the individual attributes of effective feedback. Additionally, removing some milestones that are better assessed outside of the clinical setting from the pool of possible milestones to give feedback on may improve the relevance and effectiveness of the feedback given.

Overall, the use of an interactive feedback delivery tool improved consistency in attributes of effective feedback without impacting the perceived time to deliver feedback. Many of the individual attributes of effective feedback did not reach statistical significance, and future research is needed to evaluate the validity of this tool in other settings and among different learner groups.

There are several important limitations to consider with this study. First, this was conducted at a single EM residency program, and future studies are needed to assess the external validity of the tool itself as well as the findings on its effects on the cumulative attributes of effective feedback. In the future, it would also be beneficial to directly measure the amount of time required to utilize the tool and provide feedback, as some of the responses to questions relied on the subjective assessment of time, which is subject to recall bias. It may also be helpful to ask direct questions regarding ease of use and perceived intrusions on workflow. Additionally, this study was conducted using a pre-post design. While there were no feedback interventions other than the tool performed during this time period and no new faculty hired, it is possible that faculty feedback may have improved over time. Another limitation is that this tool was derived using the prior iteration of the Milestones, which have recently been revised. However, as the intervention focused on the delivery model, rather than the specific Milestone categories, we do not anticipate this to significantly impact the findings. Moreover, responses were voluntary, and it is possible this may have led to selection bias. Finally, the outcomes assessed the impact on a cumulative feedback score but did not assess the impact on patient care or educational significance. While statistically significant, the clinical difference of a mean total score increase of 1 point is unclear, and future studies should ascertain the threshold of a clinically significant difference. Future studies should also assess this among non-EM specialties using specialty-specific Milestones. Studies should also assess this longitudinally, evaluating for the impact on resident performance and potential implications for remediation and competency-based advancement assessments.

SUPPLEMENTARY MATERIAL

Supplementary materials are available from https://doi.org/10.15441/ceem.22.395.

NOTES

ETHICS STATEMENTS

The study was approved by the Institutional Review Board of Rush University Medical Center (No. 19031105-IRB01). Informed consent was obtained from all interested participants.

AUTHOR CONTRIBUTIONS

Conceptualization: all authors; Data curation: all authors; Formal analysis: GDP; Investigation: all authors; Methodology: all authors; Project administration: all authors; Supervision: KMG, MG; Writing–original draft: all authors; Writing–review & editing: all authors. All authors read and approved the final manuscript.

ACKNOWLEDGMENTS

The authors thank the emergency medicine residents and faculty at Rush University Medical Center (Chicago, IL, USA) for their assistance with the conduct of this study.

REFERENCES

1. Edgar L, McClean S, Hogan SO, Hamstra S, Holmboe ES. The Milestones guidebook. Accreditation Council for Graduate Medical Education (ACGME); 2020.

2. Johnson CE, Keating JL, Boud DJ, et al. Identifying educator behaviours for high quality verbal feedback in health professions education: literature review and expert refinement. BMC Med Educ 2016; 16:96.

3. Jackson JL, Kay C, Jackson WC, Frank M. The quality of written feedback by attendings of internal medicine residents. J Gen Intern Med 2015; 30:973-8.

4. Bing-You R, Hayes V, Varaklis K, Trowbridge R, Kemp H, McKelvy D. Feedback for learners in medical education: what is known? A scoping review. Acad Med 2017; 92:1346-54.

5. Bowen L, Marshall M, Murdoch-Eaton D. Medical student perceptions of feedback and feedback behaviors within the context of the “educational alliance”. Acad Med 2017; 92:1303-12.

6. Telio S, Ajjawi R, Regehr G. The “educational alliance” as a framework for reconceptualizing feedback in medical education. Acad Med 2015; 90:609-14.

7. Bentley S, Hu K, Messman A, et al. Are all competencies equal in the eyes of residents? A multicenter study of emergency medicine residents’ interest in feedback. West J Emerg Med 2017; 18:76-81.

8. Buckley C, Natesan S, Breslin A, Gottlieb M. Finessing feedback: recommendations for effective feedback in the emergency department. Ann Emerg Med 2020; 75:445-51.

9. Chaou CH, Monrouxe LV, Chang LC, et al. Challenges of feedback provision in the workplace: a qualitative study of emergency medicine residents and teachers. Med Teach 2017; 39:1145-53.

11. Lefroy J, Watling C, Teunissen PW, Brand P. Guidelines: the do’s, don’ts and don’t knows of feedback for clinical education. Perspect Med Educ 2015; 4:284-99.

12. Ramani S, Konings KD, Ginsburg S, van der Vleuten CP. Twelve tips to promote a feedback culture with a growth mind-set: swinging the feedback pendulum from recipes to relationships. Med Teach 2019; 41:625-31.

13. Eppich WJ, Hunt EA, Duval-Arnould JM, Siddall VJ, Cheng A. Structuring feedback and debriefing to achieve mastery learning goals. Acad Med 2015; 90:1501-8.

14. Kraut A, Yarris LM, Sargeant J. Feedback: cultivating a positive culture. J Grad Med Educ 2015; 7:262-4.

15. Huffman BM, Hafferty FW, Bhagra A, Leasure EL, Santivasi WL, Sawatsky AP. Resident impression management within feedback conversations: a qualitative study. Med Educ 2021; 55:266-74.

16. Molloy E, Ajjawi R, Bearman M, Noble C, Rudland J, Ryan A. Challenging feedback myths: values, learner involvement and promoting effects beyond the immediate task. Med Educ 2020; 54:33-9.

17. Gottlieb M, Jordan J, Siegelman JN, Cooney R, Stehman C, Chan TM. Direct observation tools in emergency medicine: a systematic review of the literature. AEM Educ Train 2020; 5:e10519.

18. Holmboe ES, Ward DS, Reznick RK, et al. Faculty development in assessment: the missing link in competency-based medical education. Acad Med 2011; 86:460-7.

19. Kogan JR, Conforti LN, Bernabeo EC, Durning SJ, Hauer KE, Holmboe ES. Faculty staff perceptions of feedback to residents after direct observation of clinical skills. Med Educ 2012; 46:201-15.

20. Kornegay JG, Kraut A, Manthey D, et al. Feedback in medical education: a critical appraisal. AEM Educ Train 2017; 1:98-109.

21. Natesan SM, Krzyzaniak SM, Stehman C, Shaw R, Story D, Gottlieb M. Curated collections for educators: eight key papers about feedback in medical education. Cureus 2019; 11:e4164.

22. Natesan S, Stehman C, Shaw R, Story D, Krzyzaniak SM, Gottlieb M. Curated collections for educators: five key papers about receiving feedback in medical education. Cureus 2019; 11:e5728.

23. Bing-You RG, Trowbridge RL. Why medical educators may be failing at feedback. JAMA 2009; 302:1330-1.

24. Moss HA, Derman PB, Clement RC. Medical student perspective: working toward specific and actionable clinical clerkship feedback. Med Teach 2012; 34:665-7.

25. Sender Liberman A, Liberman M, Steinert Y, McLeod P, Meterissian S. Surgery residents and attending surgeons have different perceptions of feedback. Med Teach 2005; 27:470-2.

26. Accreditation Council for Graduate Medical Education (ACGME). Emergency Medicine Milestones. ACGME; 2021.

27. Kolb DA. Experiential learning: experience as the source of learning and development. Prentice Hall; 1984.

28. Vafaei A, Heidari K, Hosseini MA, Alavi-Moghaddam M. Role of feedback during evaluation in improving emergency medicine residents’ skills; an experimental study. Emerg (Tehran) 2017; 5:e28.

29. Harris PA, Taylor R, Minor BL, et al. The REDCap consortium: building an international community of software platform partners. J Biomed Inform 2019; 95:103208.

30. Brehaut JC, Colquhoun HL, Eva KW, et al. Practice feedback interventions: 15 suggestions for optimizing effectiveness. Ann Intern Med 2016; 164:435-41.

31. Schwartz AC, McDonald WM, Vahabzadeh AB, Cotes RO. Keeping up with changing times in education: fostering lifelong learning of millennial learners. Focus (Am Psychiatr Publ) 2018; 16:74-9.

32. Natesan S, Jordan J, Sheng A, et al. Feedback in medical education: an evidence-based guide to best practices from the Council of Residency Directors in Emergency Medicine. West J Emerg Med 2023; 24:479-94.

33. Holmboe ES, Fiebach NH, Galaty LA, Huot S. Effectiveness of a focused educational intervention on resident evaluations from faculty: a randomized controlled trial. J Gen Intern Med 2001; 16:427-34.

34. Hamburger EK, Cuzzi S, Coddington DA, et al. Observation of resident clinical skills: outcomes of a program of direct observation in the continuity clinic setting. Acad Pediatr 2011; 11:394-402.

35. Chen F, Arora H, Zvara DA, Connolly A, Martinelli SM. Anesthesia myTIPreport: a web-based tool for real-time evaluation of accreditation council for graduate medical education’s milestone competencies and clinical feedback to residents. A A Pract 2019; 12:412-5.

36. Snyder MJ, Nguyen DR, Womack JJ, et al. Testing quick response (QR) codes as an innovation to improve feedback among geographically-separated clerkship sites. Fam Med 2018; 50:188-94.

37. Connolly A, Goepfert A, Blanchard A, et al. myTIPreport and training for independent practice: a tool for real-time workplace feedback for milestones and procedural skills. J Grad Med Educ 2018; 10:70-7.

Fig. 1. Sample milestone-based tool. The resident decides before the shift where they feel they fall on the scale (circle). The resident decides midway through the shift how they are doing (marked as “X”). The resident and supervising physician decide where on the scale the resident performed (square). CT, computed tomography.

Table 1. Characteristics of the study population

Table 2. Linear mixed model for resident data comparing feedback received before and after feedback tool implementation

Table 3. Linear mixed model for faculty data comparing feedback received before and after feedback tool implementation
